Introduction

Building production-grade LLM applications means more than just getting answers – it’s about understanding, debugging, and optimizing your chains, agents, and RAG workflows. That’s where Langfuse comes in: a modern observability platform for LLMs, tracing every step and making your AI systems transparent and tunable.

In this post, I’ll show you how the GenAI Starter project makes Langfuse integration a breeze – whether you’re running locally or in the cloud.

Why Observability Matters for LLMs

- Debugging: See exactly how your chains, retrievers, and agents behave.

- Performance: Identify bottlenecks and optimize prompts or model selection.

- Transparency: Trace every step, every tool call, and every intermediate result.

- Collaboration: Share traces with your team for faster iteration.

Plug-and-Play Langfuse Integration

The GenAI Starter repo uses Docker Compose profiles to make Langfuse optional and easy to enable. Here’s how it works:

1. Opt-In with a Single Command

Want observability? Just run:

docker compose --profile=langfuse up -d

This spins up all Langfuse services (web, worker, DB, Redis, MinIO, ClickHouse) alongside your LLM stack.

2. Flexible Configuration

Add your Langfuse credentials to .env – local or cloud, your choice:

Local Example:

LANGFUSE_SECRET_KEY=sk-lf-...

LANGFUSE_PUBLIC_KEY=pk-lf-...

LANGFUSE_BASE_URL=http://host.docker.internal:3001

Cloud Example:

LANGFUSE_SECRET_KEY=sk-lf-... # from your cloud dashboard

LANGFUSE_PUBLIC_KEY=pk-lf-... # from your cloud dashboard

LANGFUSE_BASE_URL=https://cloud.langfuse.com

If you leave these unset, Langfuse is disabled – no errors, no fuss.
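To make the "unset means disabled" behaviour concrete, here is a minimal sketch of how such a check could look. The helper name `is_langfuse_enabled` and the rule that all three variables must be non-empty are my assumptions for illustration; the actual check in GenAI Starter may differ.

```python
import os
from typing import Mapping, Optional

# Variable names taken from the .env examples above.
REQUIRED_VARS = ("LANGFUSE_SECRET_KEY", "LANGFUSE_PUBLIC_KEY", "LANGFUSE_BASE_URL")


def is_langfuse_enabled(env: Optional[Mapping[str, str]] = None) -> bool:
    """Hypothetical helper: True only if every Langfuse variable is set and non-empty."""
    env = os.environ if env is None else env
    return all(env.get(name, "").strip() for name in REQUIRED_VARS)
```

Passing a mapping instead of reading `os.environ` directly keeps the check easy to test without touching your real environment.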

3. Zero-Friction Code Changes

Langfuse is injected as a callback handler into every example chain, agent, and retriever. Here’s the pattern:

# In src/app.py
if langfuse_enabled:
    langfuse_handler = CallbackHandler()
else:
    langfuse_handler = None

# Passed to every example:
handle_arxiv(st, model_name, langfuse_handler=langfuse_handler)

And in your chains:

result = chain.invoke(
    {"question": search_query},
    config={"callbacks": [langfuse_handler] if langfuse_handler else None},
)

No global imports, no code duplication – just clean dependency injection.
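If you find yourself repeating that conditional, one option is to pull it into a small helper. The sketch below is illustrative only: `build_config`, `handle_example`, and the `EchoChain` stand-in are hypothetical names that are not part of GenAI Starter, and `EchoChain` simply mimics the `invoke(inputs, config=...)` call shape so the example runs without LangChain or Langfuse installed.

```python
from typing import Any, Optional


def build_config(langfuse_handler: Optional[Any] = None) -> dict:
    """Return a config dict that includes callbacks only when a handler exists."""
    if langfuse_handler is None:
        return {}
    return {"callbacks": [langfuse_handler]}


class EchoChain:
    """Stand-in for a real chain: returns the inputs and config it was invoked with."""

    def invoke(self, inputs: dict, config: Optional[dict] = None) -> dict:
        return {"inputs": inputs, "config": config or {}}


def handle_example(chain: Any, query: str, langfuse_handler: Optional[Any] = None) -> dict:
    # The handler is injected by the caller; this function never imports Langfuse.
    return chain.invoke({"question": query}, config=build_config(langfuse_handler))
```

With a real chain you would pass your actual handler instead of a placeholder; with `langfuse_handler=None` the chain runs with an empty config and no tracing.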

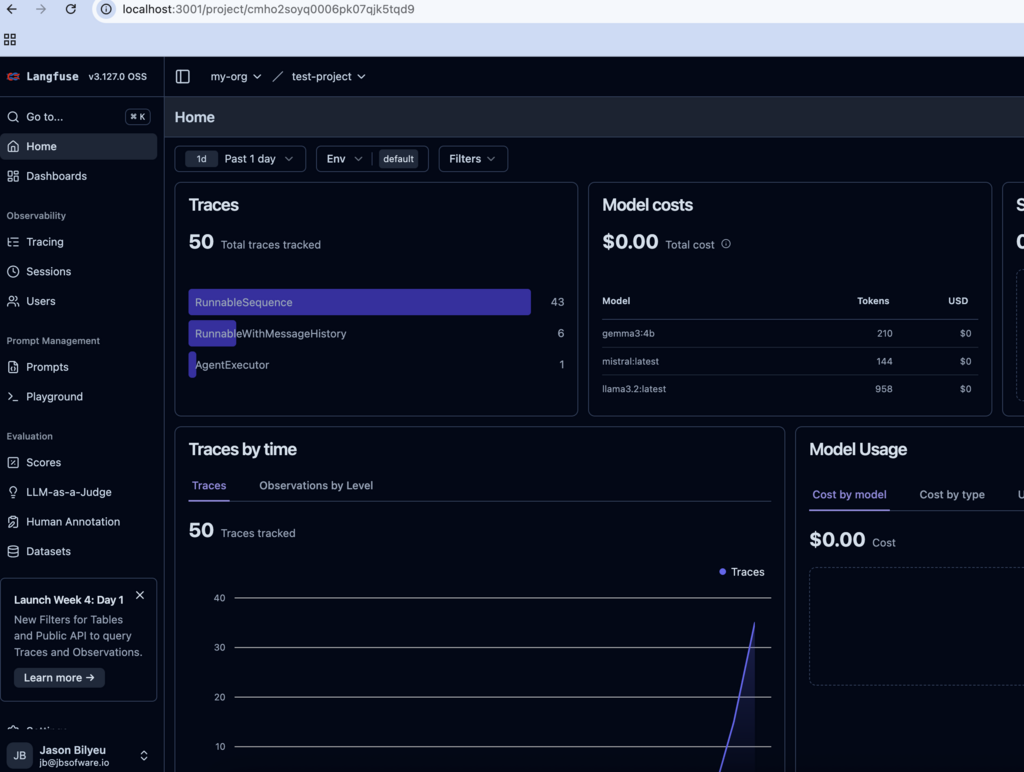

What You Get: Real-Time Tracing

With Langfuse enabled, every LLM call, chain step, and agent action is logged and visualized:

- Trace Graphs: See the full execution path, including intermediate steps.

- Prompt & Response Logging: Inspect inputs, outputs, and errors.

- Performance Metrics: Track latency, token usage, and more.

Open http://localhost:3001 (or your cloud dashboard) and watch your app in action.

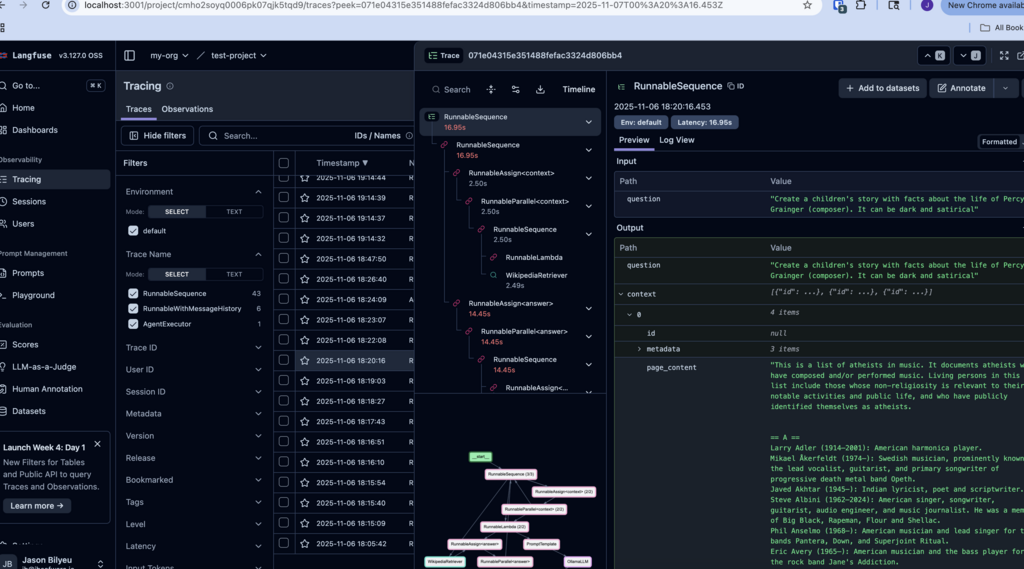

Example: Debugging a RAG Chain

Suppose your RAG workflow isn’t returning good answers. With Langfuse, you can:

- See which retriever was called and what docs were returned.

- Inspect the prompt sent to the LLM.

- View the final answer and all intermediate steps.

No more guessing – just actionable insights.

Conclusion

Langfuse turns your LLM app from a black box into a transparent, debuggable system. With GenAI Starter, adding observability is as simple as flipping a switch – no refactoring, no headaches.

Ready to level up your LLM workflows?

Clone GenAI Starter, enable Langfuse, and start building with confidence.
