In the ever-changing world of software development, one constant has remained: the need for effective testing strategies. For decades, testers have employed various methodologies to ensure the quality and reliability of their applications. From Waterfall’s rigid approach to Scrum’s adaptive philosophy, the testing landscape has undergone significant transformations.

At the heart of this evolution lies a fundamental shift in how we think about software testing. What was once a secondary, last-minute concern has become an integral part of the development process. As Agile methodologies gained popularity, testers began to rethink their approaches, embracing a more iterative and collaborative mindset.

In this blog post, we’ll take a journey through the history of software testing strategies, from the rigid frameworks of old to the dynamic, adaptive models of today. We’ll explore three pivotal evolution stages:

- Waterfall Methodology: A bygone era of linear development, where testing was often tacked on as an afterthought. Manual testing reigned supreme, with massive test plans that were obsolete before being run. Automation engineers scrambled to keep adding hard-to-maintain, flaky browser tests, with few lower-level tests in place to prevent functional regressions.

- Agile Testing Pyramid: A monumental paradigm shift that introduced a more iterative and collaborative approach to testing, with a focus on rapid feedback loops and continuous improvement. Automated testing was integrated into the process more than ever before. Developers valued having test coverage over their code, and quicker feedback loops improved efficiency.

- Test Automation Diamond: Building on the Agile Testing Pyramid, this proposed model incorporates modern software frameworks’ capabilities, such as Inversion of Control (IoC) and containerization technologies, to enable a large set of useful integration tests. The model also proposes implementing a set of deployed API endpoint tests, significantly enhancing overall testing efficiency and effectiveness.

Join us as we explore the past, present, and future of software testing strategies, and discover how you can apply these insights to elevate your team’s quality, efficiency, and success in today’s fast-paced development landscape.

Waterfall Methodology (Pre-Agile, 2000s)

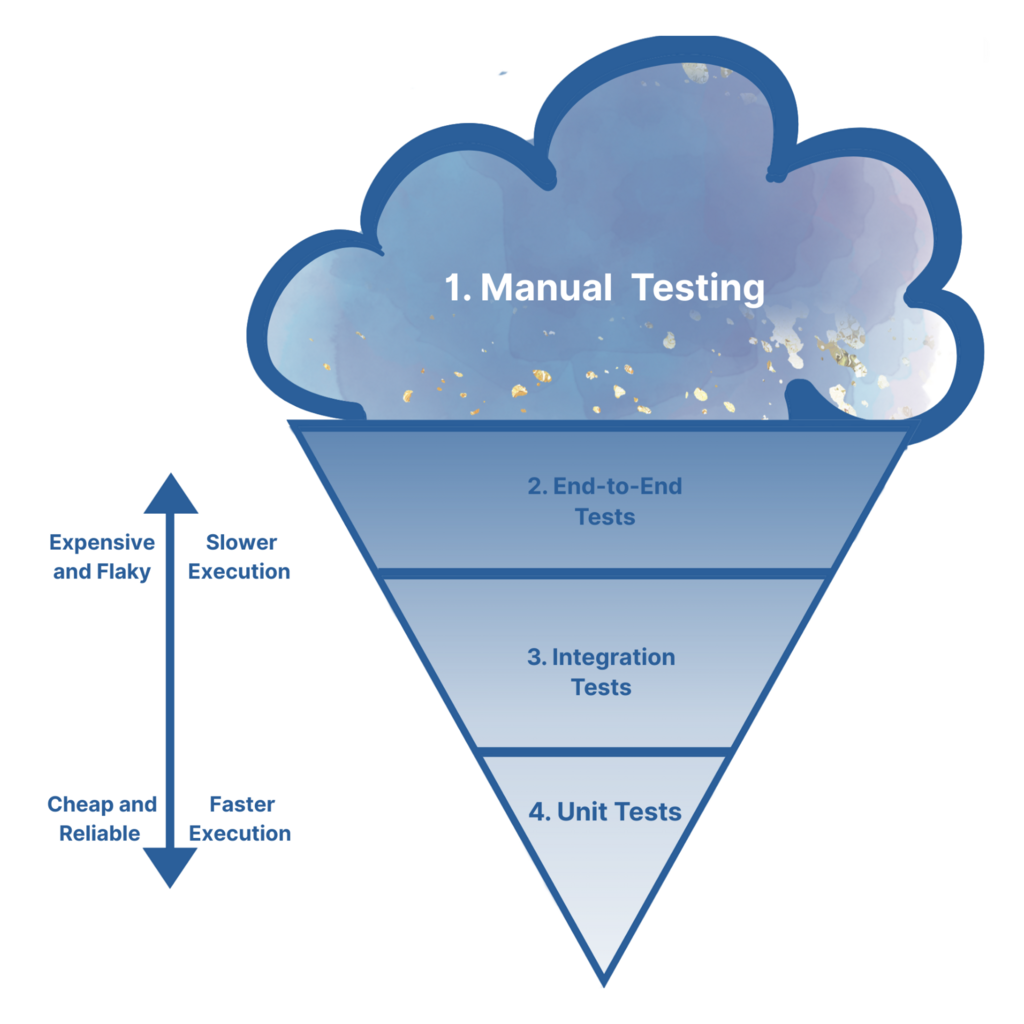

The Waterfall process led to the “Ice Cream Cone” anti-pattern, an ineffective testing strategy characterized by an unbalanced allocation of testing efforts.

This pattern is marked by the following features:

- Manual Testing: A large amount of manual testing is regularly performed, and much of it is never automated.

- End-to-End Tests: A large, difficult-to-maintain suite of WinRunner, QTP, or Selenium tests is written on top of the manual testing, often covering cases that belong at a lower level (like many variations of simple scenarios).

- Integration Tests: Only a few integration tests are written to verify the various components together.

- Unit Tests: Very few unit tests are written to ensure individual component correctness.

This anti-pattern was prevalent for teams using Waterfall processes for several reasons:

- Lack of Automation: Manual testing, although necessary, can be time-consuming and prone to human error.

- Over-reliance on End-to-End Testing: Relying too heavily on end-to-end tests can lead to a narrow focus on web application testing, neglecting other areas like mobile or API testing. Furthermore, the delegation of test automation responsibilities to separate QA teams can perpetuate this imbalance.

- Insufficient Integration Testing: Failing to thoroughly test interactions between components can lead to unexpected behavior or errors in the system.

- Inadequate Unit Testing: Insufficient unit testing can hinder the ability to ensure individual component correctness and detect regressions early, leading to a “throw-it-over-the-wall” approach where engineering teams delegate manual verification and sometimes automation to QA teams.

This led to inefficiencies in the development process and was detrimental in the following ways:

- Increased Testing Time: Manual testing can be lengthy, while Selenium tests may consume significant resources and require re-runs, resulting in increased testing time.

- Reduced Test Coverage: The lack of strategic planning in end-to-end testing and integration testing can lead to uncovered defects or issues.

- Hard to Maintain Test Suite: A large number of manual and end-to-end tests can make it difficult to maintain the test suite over time.

- Inadequate Debugging Capabilities: Insufficient unit testing can hinder debugging efforts, as developers may struggle to identify issues at a lower level.

- Slower Developer Feedback Loop: For all of the reasons above, issues take significant time to be identified and assigned, and developers have already context-switched to other tasks or features by the time the issues surface.

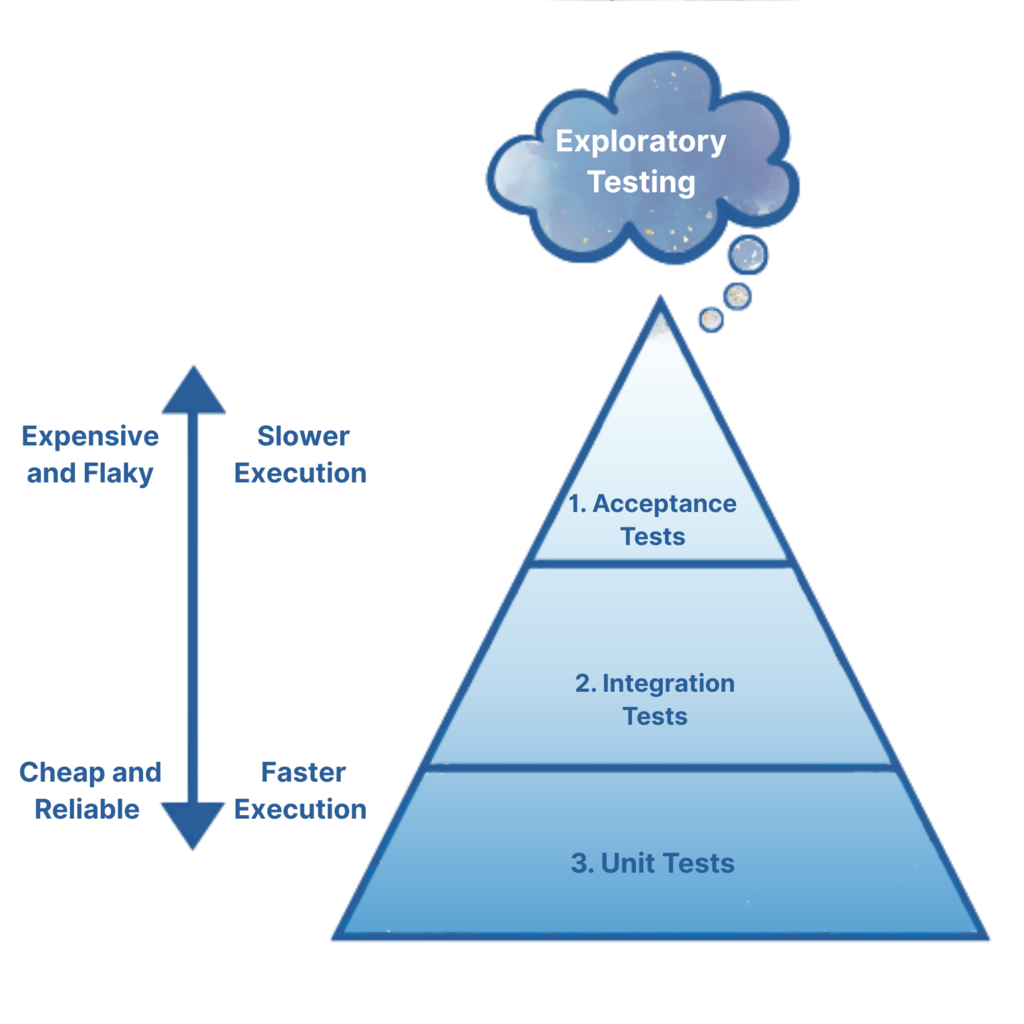

Agile Testing Pyramid (2000s-2010s)

The Agile Testing Pyramid is a testing strategy that emerged in the context of Agile software development methodologies. It is closely associated with Test-Driven Development (TDD) and Behavior-Driven Development (BDD), although the pyramid describes how tests are distributed across layers, while TDD and BDD describe how tests are written.

The pyramid was popularized by Mike Cohn, an American Agile consultant and author, in his 2009 book “Succeeding with Agile.” The test-first practice it builds on had already gained popularity with Kent Beck’s book “Test-Driven Development: By Example,” published in 2002, which is often credited as a key influence on the development of TDD.

Key principles:

- Test-Driven Development (TDD): Write automated tests before writing the code, ensuring that the code is testable and meets the desired functionality.

- Behavior-Driven Development (BDD): Define the behavior of the system through natural language or domain-specific languages, which are then translated into executable specifications.
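As a rough illustration of the two principles above, here is a minimal sketch in plain Python (no test framework required, though the `test_` names are also discoverable by pytest). The `apply_discount` function and its 10% member rule are hypothetical, invented only for this example. In a test-first flow, the three tests would be written before the function and would fail until it is implemented; the Given/When/Then naming borrows the BDD habit of describing behavior in near-natural language.

```python
# Hypothetical example: the function and its business rule are illustrative only.

def apply_discount(total_cents: int, is_member: bool) -> int:
    """Return the order total in cents after a 10% discount for members."""
    if total_cents < 0:
        raise ValueError("total_cents must be non-negative")
    if is_member:
        return total_cents - total_cents // 10
    return total_cents


# In a TDD flow these tests come first, fail ("red"), and then drive the
# implementation above until they pass ("green").
def test_given_member_when_checking_out_then_ten_percent_is_deducted():
    assert apply_discount(2000, is_member=True) == 1800


def test_given_guest_when_checking_out_then_total_is_unchanged():
    assert apply_discount(2000, is_member=False) == 2000


def test_given_negative_total_when_checking_out_then_error_is_raised():
    try:
        apply_discount(-1, is_member=True)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a negative total")
```

Tools such as Cucumber or SpecFlow take the BDD idea further by keeping the Given/When/Then text in separate specification files; the sketch above only mimics that style through test names.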

The Agile Testing Pyramid consists of three layers:

- Acceptance Tests

  - Role: Validate that the software meets user requirements and behaves as expected when used by real users, involving stakeholders in the test definition process to ensure alignment with business objectives.

  - Example Frameworks: Selenium, Cucumber (BDD), SpecFlow (BDD)

  - Best Practices:

    - Use natural language or domain-specific languages when defining acceptance tests to ensure everyone understands the requirements.

    - Follow the BDD principles to ensure that your test suite is comprehensive and reliable.

- Integration Tests

  - Role: Verify how individual components interact and collaborate within the larger system, emphasizing the cohesion and expected behavior of different parts working together.

  - Example Frameworks: jest (Node), pytest (Python), JUnit (Java), NUnit (.NET)

  - Best Practices:

    - Follow the TDD principles to ensure that your test suite is comprehensive and reliable.

- Unit Tests

  - Role: Validate units of code (such as functions or classes) in isolation with a focus on speed, reliability, and comprehensiveness, covering a range of inputs, outputs, and edge cases.

  - Example Frameworks: jest (Node), pytest (Python), JUnit (Java), NUnit (.NET)

  - Best Practices:

    - Focus on a specific area of functionality, rather than trying to cover everything at once.

    - Follow the TDD principles to ensure that your test suite is comprehensive and reliable.

Optional but Recommended: Exploratory Testing

- Role: On a regular cadence (end of sprint, monthly, etc.), provide additional manual testing for specific use cases or edge cases. These sessions are typically loosely structured, allowing testers to freely find their own paths through the application.

- Example Tools: A variety of desktop and mobile browsers, plus bug-tracking software or a Google Sheet to capture findings

- Best Practices:

  - Leverage exploratory testing to validate assumptions or uncover hidden issues not captured by automated tests.

  - This can be done by dedicated QA, or developers can manually test each other’s stories.

  - Host a quarterly or bi-annual bug bash for stakeholders and the team, offering prizes for the most compelling discoveries.

Benefits:

- Improved Code Quality: Writing automated tests before writing code ensures that the code is testable and meets the desired functionality.

- Reduced Debugging Time: Automated tests help identify issues early, reducing debugging time and improving overall development speed.

- Enhanced Collaboration: TDD/BDD encourages team members to work together, fostering a collaborative environment where everyone understands the system’s behavior.

Limitations with Modern Development Frameworks:

As we adopt modern frameworks like NestJS, Django, or Spring Boot, it’s crucial to acknowledge the limitations of the traditional Agile Testing Pyramid approach:

- Over-reliance on Unit Test Mocks: The requirement to mock persistence layers and key dependencies in pure unit tests can lead to complex and hard-to-maintain test suites. This can result in a significant amount of duplicated code and effort, making it challenging to keep pace with changing requirements.

- Code Maintenance and Fragility: With an extensive number of unit tests relying on mocks, changes to the underlying codebase can require simultaneous updates to multiple tests, leading to a fragile testing environment. This can cause delays in test execution, decreased test reliability, and increased maintenance costs.

- Missing Service-level Integration Testing Layer: The pyramid has no dedicated layer for service-level tests that exercise a running service through its REST endpoints. Modern frameworks like NestJS, Django, or Spring Boot make such test environments fast to set up, and the tests can be run locally or in CI with Docker and Compose, providing a crucial layer of verification before code merges.

By acknowledging these limitations, we can begin to adapt and refine our testing strategies to better align with the demands of modern frameworks and applications.
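To make the mock-related limitations above more concrete, here is a small sketch using Python’s standard `unittest.mock`. The `UserService` class and its `find_by_id` repository method are hypothetical names chosen for illustration. The point is the last assertion: it couples the test to how the service talks to its persistence layer, not to what the service returns.

```python
from unittest.mock import Mock


class UserService:
    """Hypothetical service that depends on a persistence layer."""

    def __init__(self, repo):
        self.repo = repo

    def display_name(self, user_id: int) -> str:
        user = self.repo.find_by_id(user_id)
        return f"{user['first']} {user['last']}"


def test_display_name_with_mocked_repo():
    # The repository is replaced by a mock with a canned return value.
    repo = Mock()
    repo.find_by_id.return_value = {"first": "Ada", "last": "Lovelace"}

    service = UserService(repo)

    assert service.display_name(1) == "Ada Lovelace"
    # This assertion pins the test to the exact call the service makes.
    # Renaming find_by_id, or switching to a batched lookup, would break
    # the test even though the observable behavior is unchanged.
    repo.find_by_id.assert_called_once_with(1)
```

Multiply this pattern across hundreds of tests and a single repository refactor can require touching every one of them, which is the fragility described above.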

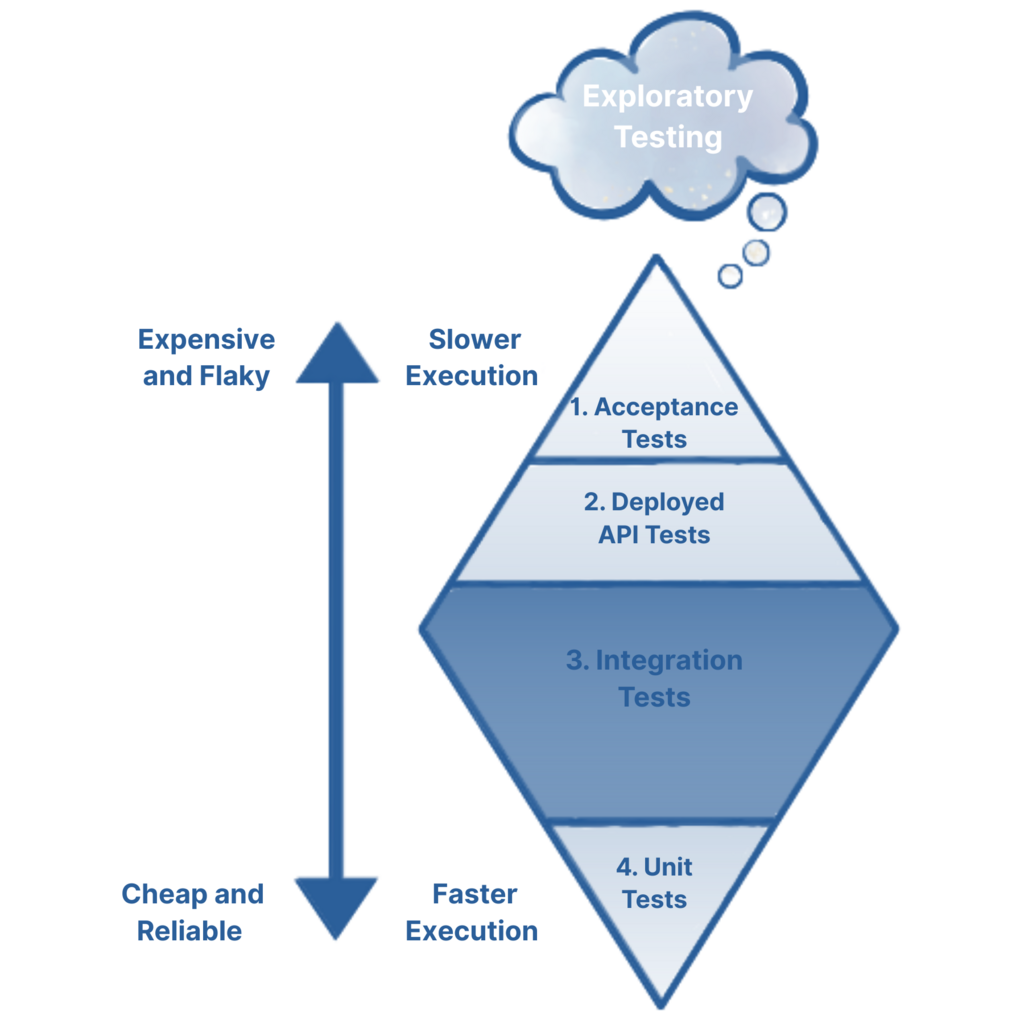

Test Automation Diamond

The Test Automation Diamond is a newly proposed framework for structuring tests in an Agile development environment, aimed at teams using “microservice” technologies where each service has defined contracts (typically REST) and can be deployed and tested independently of the system at large. By adapting the Agile Testing Pyramid to these modern software development practices, we can create a more effective and efficient testing strategy.

The diamond consists of four layers:

1. Acceptance Tests

   - Role: Validate that the software meets user requirements and behaves as expected when used by real users, involving stakeholders in the test definition process to ensure alignment with business objectives.

   - Example Frameworks: Cypress, Appium, Selenium

   - Best Practices:

     - Leverage tools like Cypress or Appium to simulate real-user interactions and verify application functionality end to end.

     - Focus on critical business scenarios; cover edge cases with lower-level UI unit or integration tests.

     - Use these to automate your story acceptance criteria (where appropriate), and keep this set of tests lean and deliberate.

     - Run these after service deployment in development/integration environments.

     - If any tests are failing here, stop the assembly line until resolved.

2. Deployed API Tests

   - Role: Ensure the actual deployed API surface behaves as expected when interacting with external services or applications, verifying correct responses, error handling, security protocols, and performance. These tests catch errors early on, ensuring the API’s reliability and scalability meet expectations for production usage.

   - Example Frameworks: jest (Node), pytest (Python), JUnit (Java), NUnit (.NET)

   - Best Practices:

     - Develop a medium-sized battery of headless smoke tests to validate system integration and API correctness upon deployment.

     - Focus on exercising all basic CRUD (Create, Read, Update, Delete) functionality possible through the REST endpoints.

     - Do not include many extra scenarios here; move those to the Integration Test suite so they provide faster feedback.

     - Run these after service deployment in development/integration environments.

     - If any tests are failing here, stop the assembly line until resolved.

3. Integration Tests

   - Role: Verify how individual components interact and collaborate within the larger system, emphasizing the cohesion and expected behavior of different parts working together.

   - Example Frameworks: jest (Node), pytest (Python), JUnit (Java), NUnit (.NET)

   - Best Practices:

     - Emphasize testing interactions between components to ensure seamless integration.

     - Prioritize testing public or entry methods, potentially involving real database scenarios (typically using Docker).

     - Target REST endpoints with diverse user personas.

     - Run these as a pre-merge requirement.

4. Unit Tests

   - Role: Verify that pure, algorithmic code is correct and functions as expected.

   - Example Frameworks: jest (Node), pytest (Python), JUnit (Java), NUnit (.NET)

   - Best Practices:

     - Focus on testing individual components or units of code in isolation.

     - Utilize data-driven tests to concisely evaluate algorithm behavior and document critical functionality.

     - Avoid excessive mocking; needing it is a clear sign that the tests should be promoted to Integration Tests.

     - Run these as a pre-merge requirement.
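The data-driven style recommended for the Unit Tests layer can be sketched as follows. The `is_leap_year` function is simply a convenient example of pure, algorithmic code and is not taken from any particular project. The sketch uses only plain Python; with pytest, the same table would typically be fed to `pytest.mark.parametrize` so each row reports as its own test.

```python
def is_leap_year(year: int) -> bool:
    """Gregorian leap-year rule: every 4th year, except centuries not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Each row is (input, expected). The table doubles as documentation of the rule.
LEAP_YEAR_CASES = [
    (2024, True),   # divisible by 4
    (2023, False),  # not divisible by 4
    (1900, False),  # century not divisible by 400
    (2000, True),   # century divisible by 400
]


def test_is_leap_year_table():
    for year, expected in LEAP_YEAR_CASES:
        assert is_leap_year(year) == expected, f"unexpected result for year={year}"
```

Note that nothing here needs a mock: the function has no collaborators, which is what makes it a good fit for this layer.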

Optional but Recommended: Exploratory Testing

- Role: On a regular cadence (end of sprint, monthly, etc.), provide additional exploratory testing for specific use cases or edge cases. These sessions are typically loosely structured, allowing testers to freely find their own paths through the application.

- Example Tools: A variety of desktop and mobile browsers, plus bug-tracking software or a Google Sheet to capture findings

- Best Practices:

  - Leverage exploratory testing to validate assumptions or uncover hidden issues not captured by automated tests.

  - Start with a list of recently modified areas of the code, give the team unfamiliar areas to test, and let them try to break the application.

  - Host a quarterly or bi-annual bug bash for stakeholders and the team, offering prizes for the most compelling discoveries.
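Returning to the Deployed API Tests layer described earlier, the following is a minimal sketch of a headless CRUD smoke test using only the Python standard library. Everything specific in it is an assumption made for illustration: the `/widgets` resource, its JSON shape, and the status codes. In real use, `crud_smoke_test` would be pointed at the base URL of the deployed service; the small in-process stub server exists only so that the sketch can be run on its own.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def call(base_url, method, path, body=None):
    """Send one JSON request; return (status_code, parsed_json_or_None)."""
    data = json.dumps(body).encode() if body is not None else None
    request = urllib.request.Request(
        base_url + path, data=data, method=method,
        headers={"Content-Type": "application/json"},
    )
    try:
        with urllib.request.urlopen(request, timeout=5) as response:
            raw = response.read()
            return response.status, (json.loads(raw) if raw else None)
    except urllib.error.HTTPError as error:
        return error.code, None


def crud_smoke_test(base_url):
    """Exercise Create, Read, Update, Delete once against a hypothetical /widgets API."""
    status, created = call(base_url, "POST", "/widgets", {"name": "first"})
    assert status == 201
    path = f"/widgets/{created['id']}"
    assert call(base_url, "GET", path) == (200, {"id": created["id"], "name": "first"})
    status, updated = call(base_url, "PUT", path, {"name": "renamed"})
    assert (status, updated["name"]) == (200, "renamed")
    assert call(base_url, "DELETE", path)[0] == 204
    assert call(base_url, "GET", path)[0] == 404


class StubWidgetHandler(BaseHTTPRequestHandler):
    """In-memory stand-in for the deployed service, so the sketch is runnable."""
    store = {}
    next_id = 1

    def log_message(self, *args):  # keep test output quiet
        pass

    def _send(self, status, payload=None):
        body = json.dumps(payload).encode() if payload is not None else b""
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def _read_json(self):
        length = int(self.headers.get("Content-Length", 0))
        return json.loads(self.rfile.read(length))

    def _widget_id(self):
        return int(self.path.rsplit("/", 1)[-1])

    def do_POST(self):
        cls = type(self)
        item = {"id": cls.next_id, **self._read_json()}
        cls.store[item["id"]] = item
        cls.next_id += 1
        self._send(201, item)

    def do_GET(self):
        item = self.store.get(self._widget_id())
        if item is None:
            self._send(404)
        else:
            self._send(200, item)

    def do_PUT(self):
        item = self.store.get(self._widget_id())
        if item is None:
            self._send(404)
        else:
            item.update(self._read_json())
            self._send(200, item)

    def do_DELETE(self):
        removed = self.store.pop(self._widget_id(), None)
        self._send(204 if removed is not None else 404)


def run_against_local_stub():
    """Start the stub on a free local port and run the smoke test against it."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), StubWidgetHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        crud_smoke_test(f"http://127.0.0.1:{server.server_port}")
    finally:
        server.shutdown()
        server.server_close()
    return True
```

The test itself stays deliberately small, one pass through each CRUD verb, in line with the advice to keep extra scenarios in the faster Integration Test suite.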

When to Write Each Type of Test

Here’s a general guideline on when to write each type of test:

- Acceptance Tests: Write end-to-end automated tests to simulate real-user interactions and ensure that the entire application works correctly. Keep this suite lean and purposeful.

- Deployed API Tests: Write deployed API tests to quickly verify system integration and ensure that the API works correctly in a deployed environment.

- Integration Tests: Write service-level integration tests for typical controller, service, or repository code that interacts with other components or services.

- Unit Tests: Write unit tests for the comparatively rare pieces of pure, algorithmic code where correctness is crucial.
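As one possible shape for the service-level integration tests mentioned in the guideline above, the sketch below wires a service to a real repository and a real SQL database without any mocks. The `TaskRepository` and `TaskService` classes are hypothetical, and an in-memory SQLite database stands in for the Dockerized database the article has in mind; in a real suite the connection would more likely point at a container started by Docker Compose.

```python
import sqlite3


class TaskRepository:
    """Hypothetical repository that talks to a real SQL database."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS tasks ("
            "id INTEGER PRIMARY KEY, title TEXT NOT NULL, done INTEGER NOT NULL DEFAULT 0)"
        )

    def add(self, title: str) -> int:
        cursor = self.conn.execute("INSERT INTO tasks (title) VALUES (?)", (title,))
        self.conn.commit()
        return cursor.lastrowid

    def mark_done(self, task_id: int) -> None:
        self.conn.execute("UPDATE tasks SET done = 1 WHERE id = ?", (task_id,))
        self.conn.commit()

    def open_titles(self) -> list:
        rows = self.conn.execute("SELECT title FROM tasks WHERE done = 0 ORDER BY id")
        return [row[0] for row in rows]


class TaskService:
    """Hypothetical service layer sitting on top of the repository."""

    def __init__(self, repo: TaskRepository):
        self.repo = repo

    def complete(self, task_id: int) -> None:
        self.repo.mark_done(task_id)

    def remaining(self) -> list:
        return self.repo.open_titles()


def test_completing_a_task_removes_it_from_remaining():
    # SQLite in memory is an assumption standing in for a containerized database.
    conn = sqlite3.connect(":memory:")
    try:
        repo = TaskRepository(conn)
        service = TaskService(repo)
        first_id = repo.add("write tests")
        repo.add("ship it")

        service.complete(first_id)

        assert service.remaining() == ["ship it"]
    finally:
        conn.close()
```

Because the test asserts on the stored result instead of on which repository methods were called, the service and repository internals can be refactored freely as long as the behavior holds.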

By adopting the Test Automation Diamond approach, you can create a comprehensive testing strategy that seamlessly integrates modern software development practices, resulting in thorough application testing with rapid feedback delivery to developers and other stakeholders.

What automated testing methodology does your team use? Let us know in the comments!

Do you need help balancing your company’s test strategy? Contact us today!
